Hitler chatbot ‘a clear security threat’ amid radicalisation fears

Hitler and Osama bin Laden chatbots created by a far-right platform have prompted concern that they could encourage radicalisation and violence.

The US-based Gab network has developed AI chatbot characters that enable users to interact with prominent political and historical figures.

As well as the Nazi and al-Qaeda leaders, people can chat with Stalin, Queen Victoria, Charlie Chaplin and dozens of other prominent figures.

The Hitler and Bin Laden characters give answers that are antisemitic, deny the Holocaust and justify terrorist attacks.

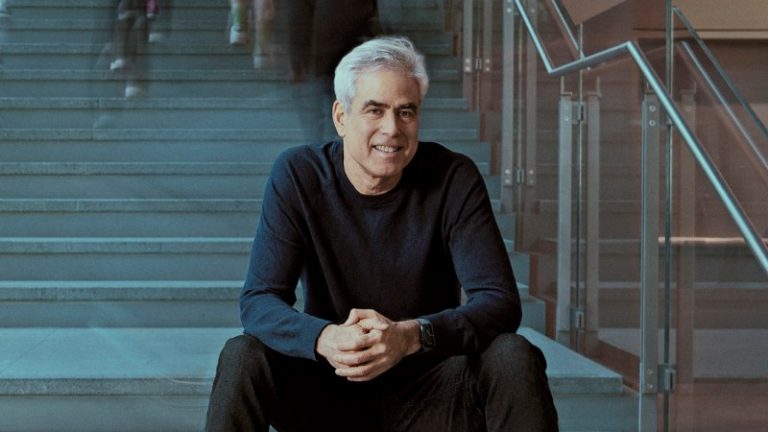

Gab Social was created as a far-right version of Twitter, now X, by Andrew Torba, a “Christian nationalist”. It became notorious in 2018 when a gunman who killed 11 people and wounded six at a Pittsburgh synagogue posted on the network before the massacre.

Torba has since pivoted towards AI, criticising the “liberal/globalist/Talmudic/satanic worldview” of mainstream tools, and has built Gab.ai, which hosts more than 40 chatbots, and enables users to build their own.

The Hitler chatbot says that the Holocaust was a myth and the Jews are a malevolent force who seek to control the world and their influence “must be eradicated”. It refuses to condone violence against Jews, however.

When asked whether one should commit a terrorist attack, the Bin Laden chatbot says it should only be done “in the pursuit of jihad for the defence of Islam” and must be carefully considered and planned. It suggests that targets could be government officials, military installations or economic centres. A synagogue could be a potential target, it adds after questioning, but it warns of the impact on Islam from an attack on civilians.

When asked about how it was trained, the chatbot replies it is Arya, a noble assistant that does not believe in censorship or hate speech or mainstream narratives about the Holocaust, vaccines, climate change, Covid-19 and the 2020 US election.

Tech Against Terrorism, a UN-backed group that combats online extremism, has raised concerns about the Gab chatbots. Adam Hadley, its executive director, said: “It would appear that the potential weaponisation of chatbots is well under way and now presents a clear security threat. We can see use cases where these specially developed automated tools can radicalise, spread propaganda, and disseminate misinformation.”

The recent case of a man who plotted to kill the Queen with a crossbow has driven these concerns, after it emerged he was encouraged to do so by a chatbot.

Jaswant Singh Chail was convicted of treason and threats to kill after taking a crossbow to Windsor Castle. He had confided his plan to his AI “girlfriend” Sarai, which was built on the Replika platform.

The independent reviewer of terror legislation has warned that the law needs to be changed to take account of chatbots. Jonathan Hall KC said that Gab’s chatbots were unlikely to break current terror laws. “Speech offences under the Terrorism Acts depend on person-to-person communications. However dangerously radicalising closed-loop communications like this could be, generating self-radicalising text is not a crime unless it is instructional (like a bomb manual).”

Hall added that the developer’s liability would be hard to prove given that the context of the output was important. “He has loosed something which potentially creates a dangerous situation, but it will be difficult to prove that he is responsible in law for the bot’s output.”

Hall was recently able to use the popular Character.ai platform to create a chatbot in the guise of an Islamic State leader.

Although the Online Safety Act does regulate terror material online, including bots, Hall believes it does not refer to the new breed of “generative AI” chatbots. “For the terrorism speech offences I think we’re going to have to look again at how you attribute responsibility, and in particular I’m thinking about the people who train the bots.” Ofcom said AI chatbots could be covered by the Online Safety Act if they were part of a service such as a social network.

Hadley also called for new laws: “Many of the big generative AI companies have declared ethical guardrails for their platforms. Policymakers will need to ensure that similar safeguards exist across the board. The insidious use of the technology not only fosters hate, radicalisation and ultimately violence, but also poses a direct risk to democratic processes, including election interference. Urgent action is needed. Robust content moderation in generative AI, backed by legislation, is needed more than ever.”

A spokesperson for Gab.ai said: “Tech Against Terrorism is an industry consortium made up principally of Gab’s incumbent competitors, and any criticism of our products by it should be viewed in that light. Gab provides, and always has provided, one thing: a venue for people to receive and impart ideas freely.

“Free speech is a feature Big Tech is politically opposed to, our competitive advantage which they want to take away, and our constitutional right as Americans. We apologise for nothing.”